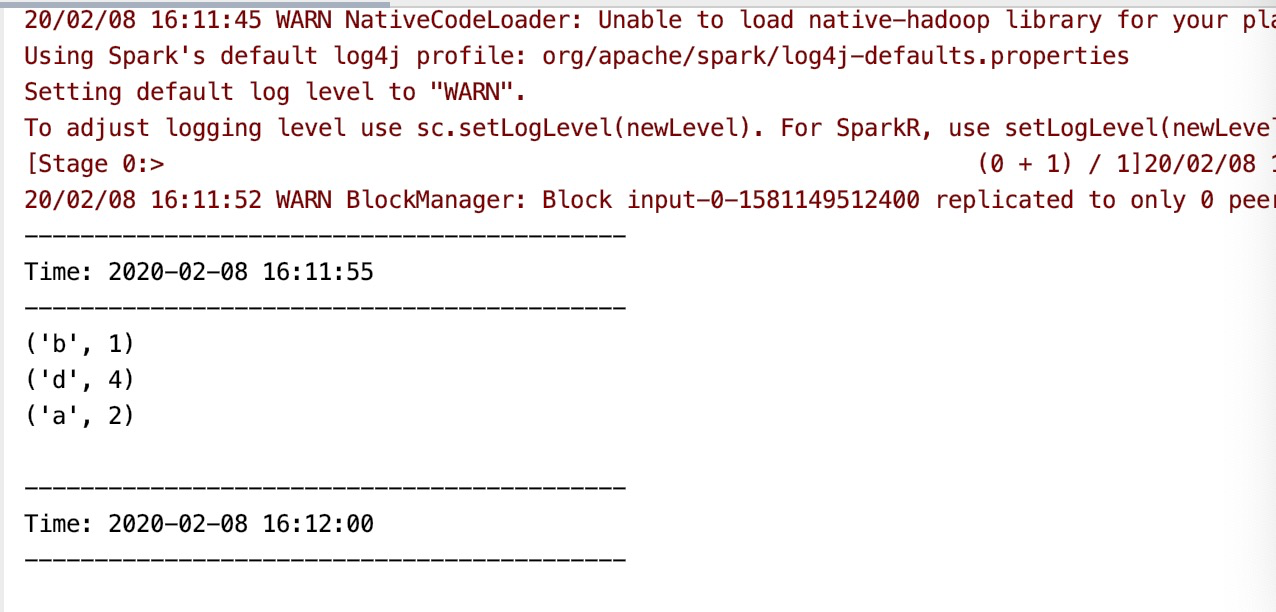

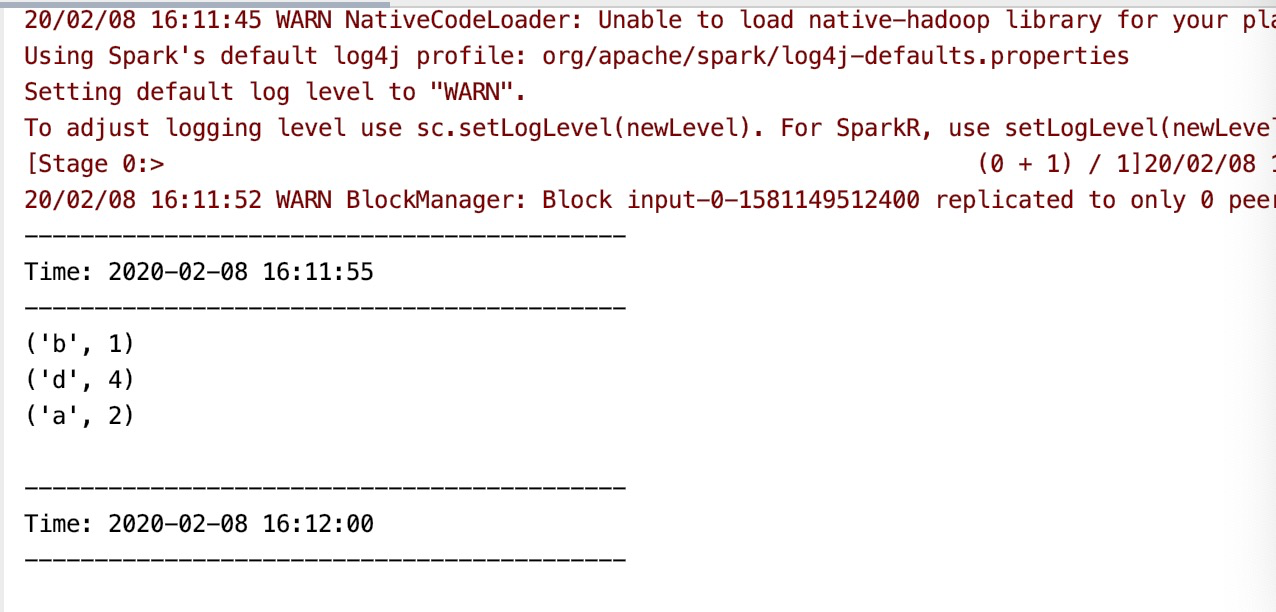

# How to Install PySpark and Py4J on Mac and Windows 10

On Windows 10 the following worked for me. I added these environment variables using Settings > Edit environment variables for your account:

`SPARK_HOME=C:\Programming\spark-2.0.1-bin-hadoop2.7`

`PYTHONPATH=%SPARK_HOME%\python;%PYTHONPATH%`

To get rid of `ImportError: No module named py4j.java_gateway`, add lines to your script, starting with `import os`, that put Spark's `python` directory and its bundled Py4J zip (under `python/lib`) on the module search path before pyspark is imported. Wrap the pyspark import in a `try`/`except ImportError as e:` block so that a failure is reported with `print("error importing spark modules", e)` instead of a bare traceback.
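A minimal sketch of that script-side fix, assuming the layout of the binary Spark download (`$SPARK_HOME/python` plus a `py4j-<version>-src.zip` under `python/lib`). The helper names `spark_python_paths`, `add_spark_to_path` and `try_import_pyspark` are hypothetical. The Py4J zip is found with a glob rather than hard-coded, because its version changes between Spark releases. For a Homebrew install, the Python sources sit under `libexec`, so you would pass that directory as `spark_home`.

```python
import glob
import os
import sys


def spark_python_paths(spark_home):
    """Return the entries that must be importable for `import pyspark`.

    Assumes the binary-download layout:
    <spark_home>/python and <spark_home>/python/lib/py4j-<version>-src.zip
    """
    python_dir = os.path.join(spark_home, "python")
    # The bundled py4j version differs per Spark release, so glob for it
    # instead of hard-coding a name like py4j-0.8.2.1-src.zip.
    py4j_zips = sorted(glob.glob(os.path.join(python_dir, "lib", "py4j-*-src.zip")))
    return [python_dir] + py4j_zips


def add_spark_to_path(spark_home=None):
    """Put Spark's Python entries at the front of sys.path and return them."""
    spark_home = spark_home or os.environ.get("SPARK_HOME")
    if not spark_home:
        raise RuntimeError("SPARK_HOME is not set")
    paths = spark_python_paths(spark_home)
    # Insert in reverse so sys.path ends up in the same order as `paths`.
    for path in reversed(paths):
        if path not in sys.path:
            sys.path.insert(0, path)
    return paths


def try_import_pyspark():
    """The try/except check described in the text; True if pyspark imports."""
    try:
        from pyspark import SparkContext  # noqa: F401  (the import is the check)
    except ImportError as e:
        print("error importing spark modules", e)
        return False
    print("success")
    return True
```

Call `add_spark_to_path()` once, before `try_import_pyspark()` or your own pyspark imports; with `SPARK_HOME` already set in the environment, no argument is needed.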

On Mac and Linux, it turns out that the `pyspark` launcher script loads Python and automatically sets the correct library paths. Check out `$SPARK_HOME/bin/pyspark`, which contains:

`# Add the PySpark classes to the Python path:`

`export PYTHONPATH=$SPARK_HOME/python/:$PYTHONPATH`

With `SPARK_HOME` set (`export SPARK_HOME=/some/path/to/apache-spark`), I added these lines to my `.bashrc` file and the modules are now correctly found!

For Spark 1.1.0, please also add `$SPARK_HOME/python/build` to `PYTHONPATH`:

`export SPARK_HOME=/Users/pzhang/apps/spark-1.1.0-bin-hadoop2.4`

`export PYTHONPATH=$SPARK_HOME/python:$SPARK_HOME/python/build:$PYTHONPATH`

On Mac, I use Homebrew to install Spark (formula `apache-spark`). Then I set the `PYTHONPATH` so the Python import works, starting from `export SPARK_HOME=/usr/local/Cellar/apache-spark/1.2.0`. Replace "1.2.0" with the actual apache-spark version on your Mac. For version 1.5.1, exporting both the Spark path and the Py4J path made it work:

`export SPARK_HOME=/usr/local/Cellar/apache-spark/1.5.1`

`export PYTHONPATH=$SPARK_HOME/libexec/python:$SPARK_HOME/libexec/python/build:$PYTHONPATH`

`PYTHONPATH=$SPARK_HOME/python/lib/py4j-0.8.2.1-src.zip:$PYTHONPATH`

So, if you don't want to type these every time you fire up the Python shell, you might want to add them to your `.bashrc` file.

Here is a simple method, if you don't care how it works: use findspark. It assumes Spark is downloaded on your system and you have an environment variable `SPARK_HOME` pointing to it. Run `pip install findspark` in a terminal, then in your Python shell run `import findspark` and `findspark.init()`, and import the necessary modules, e.g. `from pyspark import SparkContext`.

Also, don't run your .py file as `python filename.py`; use `spark-submit filename.py` instead.

For a Spark execution in pyspark, two components are required to work together: the `pyspark` Python package and a Spark instance running in a JVM. When launching things with `spark-submit` or `pyspark`, these scripts take care of both: they set up your `PYTHONPATH`, `PATH`, etc. so that your script can find pyspark, and they also start the Spark instance, configured according to your parameters, e.g. `--master X`.

Alternatively, it is possible to bypass these scripts and run your Spark application directly in the Python interpreter, like `python myscript.py`. This is especially interesting when Spark scripts become more complex and eventually receive their own arguments. It takes three steps:

1. Ensure the pyspark package can be found by the Python interpreter. As already discussed, either add the spark/python directory to `PYTHONPATH` or install pyspark directly with `pip install pyspark`.
2. Set the parameters of the Spark instance from your script (those that used to be passed to pyspark):
   - Spark configurations that you would normally set with `--conf` are defined with a config object (or string configs) passed to `SparkSession.builder.config()`.
   - Main options (like `--master` or `--driver-memory`) can, for the moment, be set by writing to the `PYSPARK_SUBMIT_ARGS` environment variable, e.g. `os.environ['PYSPARK_SUBMIT_ARGS'] = spark_main_opts + " pyspark-shell"`. To make things cleaner and safer, you can set it from within Python itself, and Spark will read it when starting.
3. Start the instance, which just requires calling `getOrCreate()` on the builder object.

Your script can therefore begin with `from pyspark.sql import SparkSession` and then follow these steps.
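Running a Spark application directly with `python myscript.py`, as described above, can be sketched like this. It assumes pyspark is already importable and that you are on Spark 2.x or later, where `SparkSession` exists. The helper names `set_spark_main_opts` and `build_session`, and the default `--master local[2]`, are illustrative assumptions rather than anything from the original answers.

```python
import os


def set_spark_main_opts(spark_main_opts):
    """Expose spark-submit "main options" (e.g. "--master local[2]") to PySpark.

    PySpark reads PYSPARK_SUBMIT_ARGS when it launches the JVM gateway; the
    trailing "pyspark-shell" token mirrors the snippet in the text.
    Returns the value now stored in the environment (or None).
    """
    if spark_main_opts:
        os.environ["PYSPARK_SUBMIT_ARGS"] = spark_main_opts + " pyspark-shell"
    return os.environ.get("PYSPARK_SUBMIT_ARGS")


def build_session(spark_main_opts="--master local[2]", conf=None):
    """Set main options, apply --conf style settings, then start Spark."""
    # Must happen before getOrCreate(), which is when the JVM is started.
    set_spark_main_opts(spark_main_opts)

    # Imported here so this module still loads where pyspark is missing.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder
    # Each entry plays the role of a `--conf key=value` command-line flag.
    for key, value in (conf or {}).items():
        builder = builder.config(key, value)
    return builder.getOrCreate()
```

For example, `spark = build_session(conf={"spark.sql.shuffle.partitions": "4"})` would start a local session with that setting. Keeping the environment write in its own function makes it easy to check without starting a JVM.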
